Nominations for Humphrey Software Quality Award Open Through September 1

May 16, 2024 • Article

The award recognizes improvement in an organization's ability to create and evolve high-quality software-dependent systems.

Read More

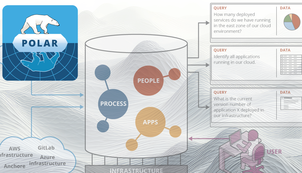

New SEI Tool Brings Visibility to DevSecOps Pipelines

May 06, 2024 • Press Release

The Software Engineering Institute today announced the release of a tool that provides a comprehensive visualization of the entire DevSecOps pipeline.

IEEE Secure and Trustworthy Machine Learning Conference Awards SEI Researchers’ Trojan Finding Method

May 02, 2024 • Article

The researchers' method uses off-the-shelf tools and generative AI to visualize hidden adversarial images in computer vision models.

2023 Year in Review Highlights Impact of SEI Research and Development

April 15, 2024 • Article

Significant projects in 2023 included work on generative AI, vulnerability management, zero trust, large language models for intelligence, and more.

International Conference on Conceptual Modeling ER 2024 Opens Call for Papers

March 25, 2024 • Article

The long-running event is accepting submissions on how artificial intelligence and machine learning are revolutionizing conceptual modeling.

SEI Event to Collaborate on Approaches to Improve Software Security

March 13, 2024 • Press Release

The CERT Division of the Carnegie Mellon University Software Engineering Institute (SEI) today announced registration and a call for presentations for Secure Software by Design, a two-day, in-person event on August 6 and 7 at the SEI’s Arlington, Va., location.

SEI and OpenAI Recommend Ways to Evaluate Large Language Models for Cybersecurity Applications

February 21, 2024 • Article

A new white paper says evaluation of LLM capability and risk should include real-world cyber scenarios, not just factual knowledge tests.